Learning Text Preprocessing in Python — Machine Learning

Originally published at https://www.niit.com/india/.

Generally, a data scientist spends 70–80% of their time cleaning and preprocessing data. Data is usually collected from different sources and stored in a raw format that is unsuitable for further analysis, while most machine learning models need their input in a specific format to execute the algorithm. That’s why it is important to structure the data to suit both your approach and your domain.

For better results, the dataset should be formatted so that more than one machine learning algorithm can be run on it, which makes it possible to compare them and choose the best one.

In this article, you will read about different text preprocessing techniques and their implementation in Python.

What is Preprocessing of Data?

Preprocessing of data is the set of steps that convert human language into a machine-readable format or, more generally, transform raw datasets into a predictable and analyzable format before they are fed to an algorithm for a given task.

There are no fixed steps for preprocessing data. Choose the steps based on your requirements and your dataset, and choose them carefully, because they play an important role in the results you obtain.

Understanding Different Text Preprocessing Techniques

Before a model is built, text data is preprocessed so that an algorithm can readily accept and assess it. Some of the preprocessing techniques are:

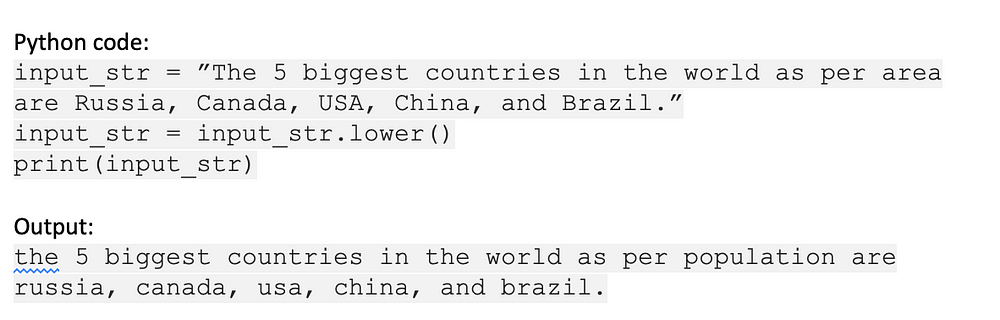

1. Text Lowercase

Lowercasing is one of the simplest forms of text preprocessing. Words that differ only in case are all mapped to the same lowercase form, which reduces the vocabulary size of the dataset.

Differences in capitalization can produce different output, or no output at all, so lowercasing helps keep results consistent. For example, a search for “sales” may return no results because the word was indexed as “SALES”. Lowercasing is a particularly good way to solve such sparsity issues when your dataset is not very large.

While lowercasing is a good standard practice, capitalization is sometimes important. This is especially true of source code files, where lowercasing makes distinct identifiers identical and so removes a feature that could be predictive.

For example:
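A minimal sketch of lowercasing with Python's built-in `str.lower()`. The function name `to_lowercase` and the sample sentence are illustrative assumptions, not taken from the original article.

```python
def to_lowercase(text):
    """Return the text with every cased character converted to lowercase."""
    return text.lower()


# Illustrative sample: "SALES" and "Sales" both become "sales".
sample = "SALES figures for the Sales team"
print(to_lowercase(sample))  # sales figures for the sales team
```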

2. Remove Numbers

In this step, numbers that are irrelevant to the task are removed or converted into their textual form so that the algorithm can assess the data more easily. Such numbers can mislead the prediction when they carry no numerical meaning, but whether to remove them depends entirely on your task. Regular expressions are generally used to remove numbers from the dataset.

For example:
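One way this could look with the standard `re` module is sketched below. The helper name `remove_numbers` and the sample text are assumptions made for illustration.

```python
import re


def remove_numbers(text):
    """Delete every run of one or more digits from the text."""
    # \d+ matches one or more consecutive digit characters.
    return re.sub(r"\d+", "", text)


sample = "There are 3 balls in this bag and 12 in the other one."
print(remove_numbers(sample))
```

Note that this leaves the surrounding spaces in place, so a whitespace cleanup step is usually applied afterwards.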

3. Remove Punctuation

Removing punctuation is an important step for reducing variation, so that the same word does not appear in several forms. If punctuation is not removed, “Yes.”, “Yes,” and “Yes!” are treated as different tokens.

For example:
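A possible sketch using only the standard library: `str.translate` with a table built from `string.punctuation`. The function name is an assumption, and `string.punctuation` covers ASCII punctuation only, so typographic quotes or other Unicode marks would need extra handling.

```python
import string


def remove_punctuation(text):
    """Strip ASCII punctuation characters from the text."""
    # The third argument of str.maketrans lists characters to delete.
    table = str.maketrans("", "", string.punctuation)
    return text.translate(table)


print(remove_punctuation("Yes. Yes, Yes!"))  # Yes Yes Yes
```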

4. Remove Whitespaces

In this process, the split and join functions are used to remove extra whitespace from a string: leading and trailing spaces are dropped and repeated spaces are collapsed into one.

For example:

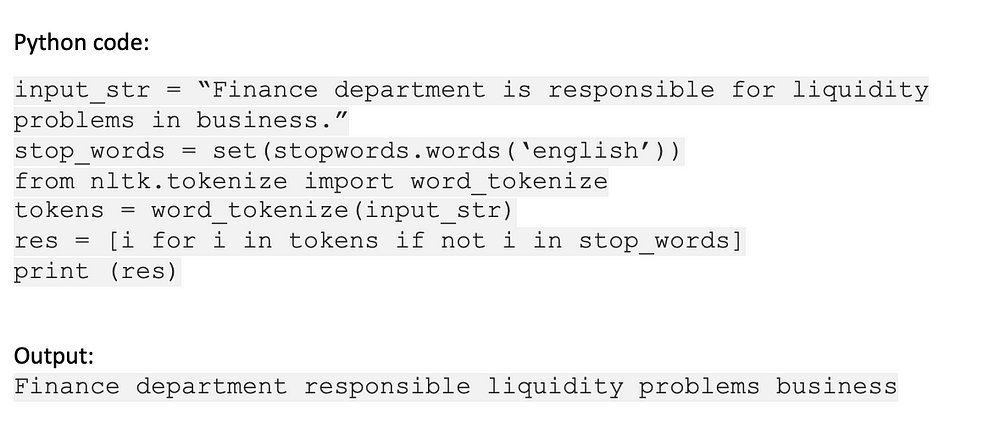
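In text form, the split-and-join approach can be sketched as follows. The function name `normalize_whitespace` is an assumption chosen for illustration.

```python
def normalize_whitespace(text):
    """Collapse runs of whitespace and trim both ends of the text."""
    # split() with no arguments splits on any whitespace and drops empty
    # pieces; join() then puts a single space between the words.
    return " ".join(text.split())


sample = "   we  don't need   the given \t questions  "
print(normalize_whitespace(sample))  # we don't need the given questions
```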

5. Remove Default Stopwords

Stop words are words that you can remove from a sentence without changing its meaning much. They carry little information and are removed to make search more effective.

Removing these low-information words lets you focus on the important ones, which can increase the speed and accuracy of your search. For example, “is”, “am” and “are” are stop words. The NLTK library provides a set of stop words that you can use to remove them from your text.

For example:
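The sketch below shows the filtering step itself. To stay dependency-free it uses a small hand-picked stop word set (`STOP_WORDS`, an assumption made for illustration) rather than NLTK's list. With NLTK installed and the corpus fetched via `nltk.download("stopwords")`, `nltk.corpus.stopwords.words("english")` could be used in its place.

```python
# Small illustrative stop word set; a real list such as NLTK's is much longer.
STOP_WORDS = {"a", "an", "the", "is", "am", "are", "and", "of", "to", "in"}


def remove_stopwords(text):
    """Split on whitespace and keep only tokens that are not stop words."""
    tokens = text.split()
    # Compare in lowercase so "The" and "the" are treated alike.
    return [token for token in tokens if token.lower() not in STOP_WORDS]


sample = "This is a sample sentence and we are going to remove the stop words"
print(remove_stopwords(sample))
# ['This', 'sample', 'sentence', 'we', 'going', 'remove', 'stop', 'words']
```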

6. Stemming

In stemming, the ends of words are chopped off to reduce them to a root form. For example, the words “fumble”, “fumbled” and “fumbles” are all reduced to one common stem, so that inflection in words is reduced. The resulting stem may not be an actual dictionary word but a canonical form of the original word. Stemming makes your search easier and standardizes your vocabulary.

For example: Using Porter Stemming Algorithm
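The Porter algorithm itself is available in NLTK as `nltk.stem.PorterStemmer`. The sketch below does not implement Porter's rules; it is a deliberately naive suffix stripper, with a made-up suffix list and length threshold, meant only to show what chopping off word endings looks like and why the result need not be a dictionary word.

```python
# Hypothetical suffix list for illustration; longer suffixes are tried first.
SUFFIXES = ("ing", "ed", "es", "s", "e")


def naive_stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


print([naive_stem(w) for w in ["fumble", "fumbled", "fumbles"]])
# ['fumbl', 'fumbl', 'fumbl']
```

With NLTK installed, `PorterStemmer().stem(word)` applies the real Porter rules instead of this toy list.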

7. Lemmatization

Lemmatization is similar to stemming: it also reduces inflected words to a base form. The difference is that lemmatization does not simply chop off endings; it uses a language-specific knowledge base to find the correct base word. As a result, lemmatization output usually makes more sense than stemming output.

Libraries and tools that provide lemmatization include NLTK (WordNet Lemmatizer), spaCy, Stanford CoreNLP, Pattern, DKPro Core, gensim, Memory-Based Shallow Parser (MBSP), TextBlob, Apache OpenNLP, Apache Lucene, Illinois Lemmatizer, and General Architecture for Text Engineering (GATE).

For example: Lemmatization using NLTK

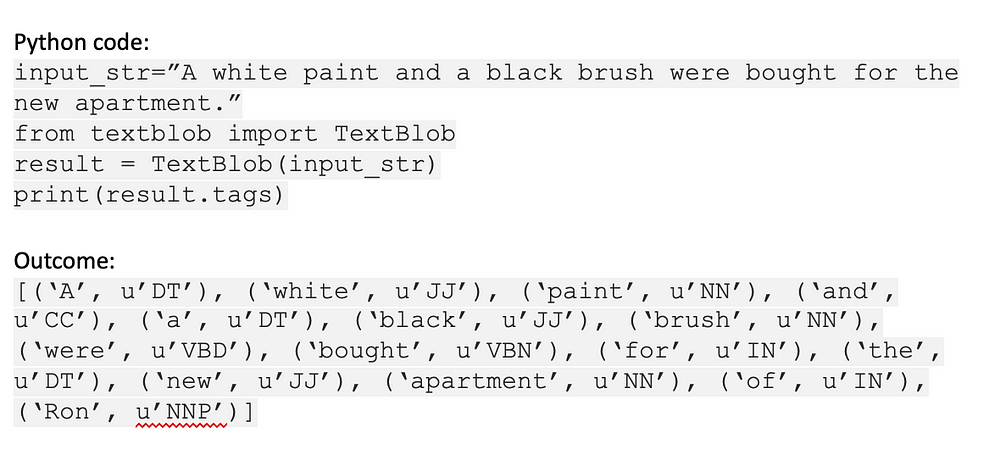
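To make the "knowledge base" idea concrete without any external data, here is a toy sketch in which a small hand-written dictionary (`LEMMA_TABLE`, a hypothetical stand-in for a resource such as WordNet) maps inflected forms to their base words. It is not how NLTK is implemented; it only illustrates lookup as opposed to suffix chopping.

```python
# Tiny hand-written lookup table standing in for a real knowledge base
# such as WordNet (entries chosen purely for illustration).
LEMMA_TABLE = {
    "mice": "mouse",
    "geese": "goose",
    "was": "be",
    "were": "be",
    "better": "good",
    "studies": "study",
}


def lemmatize(word):
    """Look the word up in the table; fall back to the lowercased word."""
    key = word.lower()
    return LEMMA_TABLE.get(key, key)


print([lemmatize(w) for w in ["Mice", "were", "better", "table"]])
# ['mouse', 'be', 'good', 'table']
```

Irregular forms such as “mice” or “better” are exactly the cases a suffix-based stemmer cannot handle. With NLTK and its WordNet data installed, `nltk.stem.WordNetLemmatizer().lemmatize(word, pos=...)` performs this kind of lookup against WordNet.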

8. Chunking

In chunking, the parts of speech in a sentence, such as nouns, verbs, adverbs and adjectives, are identified and grouped into larger units such as noun groups, verb groups and phrases, each with a discrete grammatical meaning.

Some of the chunking tools are NLTK, Apache OpenNLP, General Architecture for Text Engineering (GATE), FreeLing, TreeTagger chunker, etc.

For example:
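A simplified sketch of noun-phrase chunking over tokens that are already tagged with Penn Treebank style part-of-speech tags. The tagged sentence is supplied by hand, and the grouping rule (optional determiners and adjectives followed by one or more nouns) is an assumption chosen for illustration. In NLTK, a comparable rule can be given to `nltk.RegexpParser` as a chunk grammar.

```python
def chunk_noun_phrases(tagged_tokens):
    """Group determiner/adjective runs followed by nouns into chunks."""
    chunks = []
    current = []
    has_noun = False

    def flush():
        # Keep the collected words only if they include at least one noun.
        nonlocal current, has_noun
        if current and has_noun:
            chunks.append(" ".join(current))
        current = []
        has_noun = False

    for word, tag in tagged_tokens:
        if tag.startswith("NN"):
            current.append(word)
            has_noun = True
        elif tag in ("DT", "JJ") and not has_noun:
            current.append(word)
        else:
            flush()
            # A determiner or adjective after a noun starts a new chunk.
            if tag in ("DT", "JJ"):
                current.append(word)
    flush()
    return chunks


# Hand-tagged sample sentence: "the little dog barked at the cat".
tagged = [
    ("the", "DT"), ("little", "JJ"), ("dog", "NN"),
    ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN"),
]
print(chunk_noun_phrases(tagged))  # ['the little dog', 'the cat']
```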

Endnote

Text preprocessing is an important step before feeding data into a machine learning algorithm, because most algorithms need human language converted into a machine-readable form, and results may vary if this step is skipped.

Apart from the techniques shown in this article, many other steps are also used, such as removing URLs and HTML tags. You must choose the right combination of text preprocessing steps for your dataset.
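As a rough sketch of those two extra steps, both can be approximated with regular expressions. The patterns and function names below are assumptions for illustration: the URL pattern only catches `http://` and `https://` links, and the tag pattern is not a full HTML parser.

```python
import re


def remove_urls(text):
    """Delete http:// and https:// links up to the next whitespace."""
    return re.sub(r"https?://\S+", "", text)


def remove_html_tags(text):
    """Delete anything that looks like an HTML tag, keeping the inner text."""
    return re.sub(r"<[^>]+>", "", text)


print(remove_html_tags("<p>Hello <b>world</b></p>"))  # Hello world
print(remove_urls("Read more at https://www.niit.com/india/ today"))
```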
